Skill-Centric

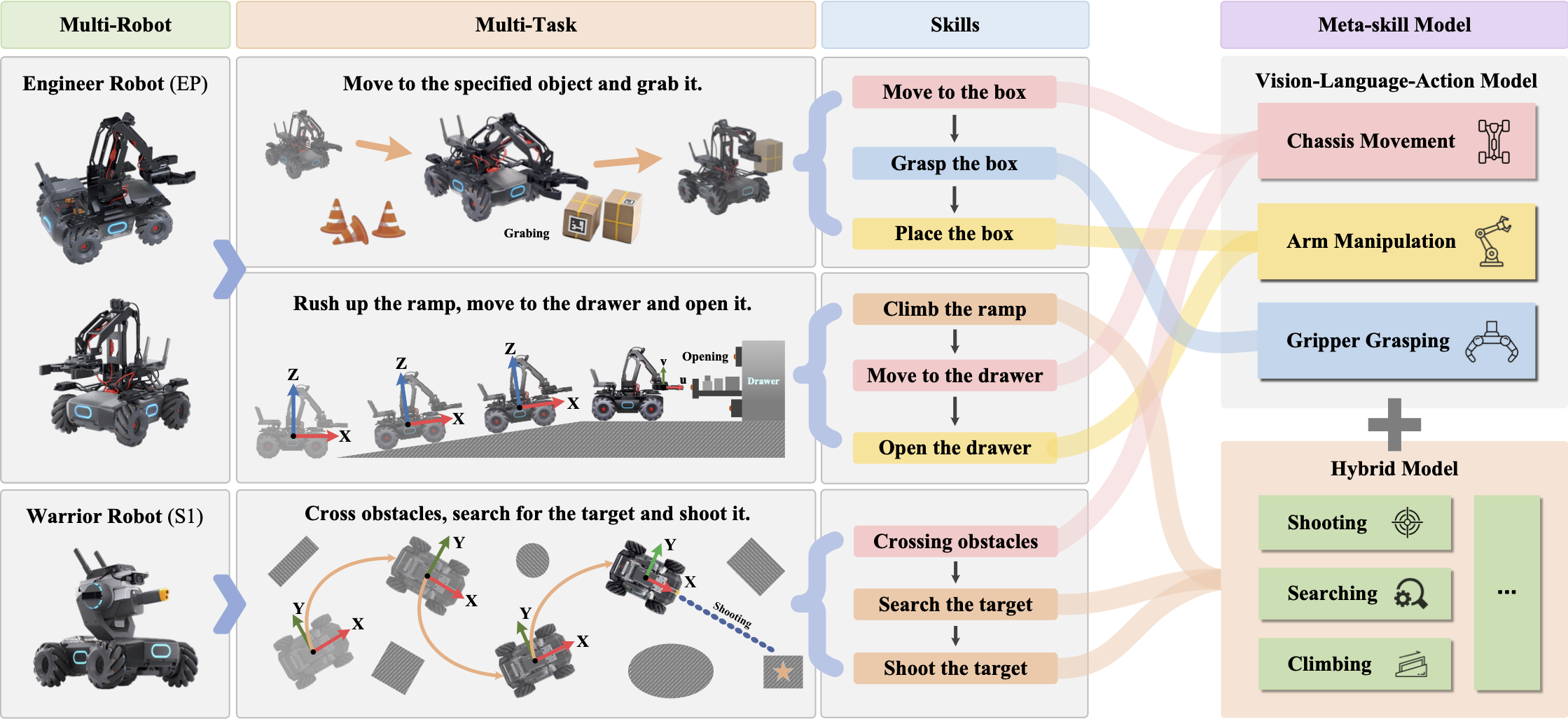

Inspiration for the skill-centric method. Robots with different modalities can perform different tasks, and robots with the same modality can be used in various scenarios. We extract the elements shared across these diverse robotic tasks, define them as meta-skills, and store them in a skill list. These skills are then used to train a Vision-Language-Action (VLA) model or to construct traditional models, eventually yielding a skill model that can adapt to new tasks.
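The extraction step described in the caption can be illustrated with a toy sketch. It assumes each task is already decomposed into named primitive steps and treats a primitive as a meta-skill when it recurs in at least two tasks. The task names, the primitives, the `min_tasks` threshold, and both helper functions are hypothetical and chosen only for illustration; the source does not specify how shared elements are identified.

```python
from collections import Counter

# Hypothetical task decompositions (illustrative names, not from the source).
TASKS = {
    "pour_water": ["reach", "grasp", "lift", "tilt", "place"],
    "open_drawer": ["reach", "grasp", "pull"],
    "stack_blocks": ["reach", "grasp", "lift", "place"],
}


def extract_meta_skills(tasks, min_tasks=2):
    """Return primitives that occur in at least `min_tasks` tasks, sorted by name."""
    # Count each primitive once per task so repeats inside a task do not inflate it.
    counts = Counter(step for steps in tasks.values() for step in set(steps))
    return sorted(skill for skill, n in counts.items() if n >= min_tasks)


def missing_skills(new_task_steps, skill_list):
    """List the steps of a new task that the skill list does not yet cover."""
    return [step for step in new_task_steps if step not in skill_list]


# Assumed stand-in for the "skill list" in the caption.
SKILL_LIST = extract_meta_skills(TASKS)

if __name__ == "__main__":
    print(SKILL_LIST)
    print(missing_skills(["reach", "grasp", "lift", "place"], SKILL_LIST))
```

In this sketch, a new task whose steps are all in the skill list needs no new skills, which loosely mirrors the claim that a skill model built from meta-skills can adapt to new tasks. A real system would learn skills from demonstrations rather than match step names.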